Containerless! How to Run WebAssembly Workloads on Kubernetes with Rust

WebAssembly (Wasm) is one of the most exciting and underestimated software technologies invented in recent times. It’s a binary instruction format for a stack-based virtual machine that aims to execute at native speeds with a memory-safe and secure sandbox. Wasm is portable, cross-platform, and language-agnostic—designed as a compilation target for languages. Though originally part of the open web platform, it has found use cases beyond the web. WebAssembly is now used in browsers, Node.js, Deno, Kubernetes, and IoT platforms.

You can learn more about WebAssembly at WebAssembly.org.

WebAssembly on Kubernetes

Though initially designed for the web, WebAssembly proved to be an ideal format for writing platform- and language-agnostic applications. You may be aware of something similar in the container world: Docker containers. People, including Docker co-founder Solomon Hykes, recognized the similarity and acknowledged that WebAssembly can be even more efficient since it’s fast, portable, and secure, running at near-native speeds. This means that you can use WebAssembly alongside containers as workloads on Kubernetes. Another WebAssembly initiative, the WebAssembly System Interface (WASI), together with the Wasmtime project, makes this possible.

If WASM+WASI existed in 2008, we wouldn't have needed to created Docker. That's how important it is. Webassembly on the server is the future of computing. A standardized system interface was the missing link. Let's hope WASI is up to the task! https://t.co/wnXQg4kwa4

— Solomon Hykes (@solomonstre) March 27, 2019

WebAssembly on Kubernetes is relatively new and has some rough edges at the moment, but it’s already proving to be revolutionary. Wasm workloads can be extremely fast: a module can finish executing in less time than a container takes to start. The workloads are sandboxed by default, which gives them a tighter security boundary than containers, and the compact binary format makes them much smaller than container images.

If you want to learn more about WASI, check out the original announcement from Mozilla.

Why Rust?

I previously wrote a blog post about why Rust is a great language for the future. TL;DR: Rust is secure and fast without the compromises of most modern languages, and it has the best ecosystem and tooling for WebAssembly. So Rust + Wasm gives you a combination that is both secure and fast.

Enter Krustlet

Krustlet is a Kubelet written in Rust for WebAssembly workloads (written in any language). It listens for new pod assignments based on the tolerations specified in the manifest and runs them. Since the default Kubernetes nodes cannot run Wasm workloads natively, you need a Kubelet that can, and this is where Krustlet comes in.

Run WebAssembly workloads on Kubernetes with Krustlet

Today, you will use Krustlet to run a Wasm workload written in Rust on Kubernetes.

Prerequisites

- Docker

- kubectl

- kind or another local Kubernetes distribution

- Rust toolchain (includes rustup, rustc, and cargo)

Preparing the cluster

First, you need to prepare a cluster and install Krustlet on the cluster to run WebAssembly on it. I’m using kind to run a local Kubernetes cluster; you can also use MiniKube, MicroK8s, or another Kubernetes distribution.

The first step is to create a cluster with the below command:

kind create cluster

Now you need to bootstrap Krustlet. For this, you will need kubectl installed and a kubeconfig that has access to create Secrets in the kube-system namespace and can approve CertificateSigningRequests. You can use these handy scripts from Krustlet to download and run the appropriate setup script for your OS:

# Setup for Linux/macOS

curl https://raw.githubusercontent.com/krustlet/krustlet/main/scripts/bootstrap.sh | /bin/bash

Now you can install and run Krustlet.

Download a binary release from the release page and run it. Download the appropriate version for your OS.

# Download for Linux

curl -O https://krustlet.blob.core.windows.net/releases/krustlet-v1.0.0-alpha.1-linux-amd64.tar.gz

tar -xzf krustlet-v1.0.0-alpha.1-linux-amd64.tar.gz

# Install for Linux

KUBECONFIG=~/.krustlet/config/kubeconfig \
  ./krustlet-wasi \
  --node-ip=172.17.0.1 \
  --node-name=krustlet \
  --bootstrap-file=${HOME}/.krustlet/config/bootstrap.conf

Note: If you use Docker for Mac, the node-ip will be different. Follow the instructions from the Krustlet docs to figure out the IP. If you get the error “krustlet-wasi cannot be opened because the developer cannot be verified”, you can allow it with the Allow Anyway button found at System Preferences > Security & Privacy > General on macOS.

You should see a prompt to manually approve TLS certs since the serving certs Krustlet uses must be manually approved. Open a new terminal and run the below command. The hostname will be shown in the prompt from the Krustlet server.

kubectl certificate approve <hostname>-tls

This is required only the first time you start Krustlet. Keep the Krustlet server running. You might see some errors being logged, but let’s ignore them for now, as Krustlet is still pre-release software (the build used here is an alpha) and has some rough edges.

Let’s see if the node is available. Run kubectl get nodes, and you should see something like this:

kubectl get nodes -o wide

# Output

NAME                 STATUS   ROLES                  AGE   VERSION         INTERNAL-IP   EXTERNAL-IP   OS-IMAGE       KERNEL-VERSION            CONTAINER-RUNTIME
kind-control-plane   Ready    control-plane,master   16m   v1.21.1         172.21.0.2    <none>        Ubuntu 21.04   5.15.12-200.fc35.x86_64   containerd://1.5.2
krustlet             Ready    <none>                 12m   1.0.0-alpha.1   172.17.0.1    <none>        <unknown>      <unknown>                 mvp

Now let’s test if Krustlet is working as expected by applying the Wasm workload below. As you can see, the pod defines tolerations that match the taints on the Krustlet node, so it is allowed to be scheduled there.

kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: hello-wasm
spec:
  containers:
    - name: hello-wasm
      image: webassembly.azurecr.io/hello-wasm:v1
  tolerations:
    - effect: NoExecute
      key: kubernetes.io/arch
      operator: Equal
      value: wasm32-wasi # or wasm32-wasmcloud according to module target arch
    - effect: NoSchedule
      key: kubernetes.io/arch
      operator: Equal
      value: wasm32-wasi
EOF

Once applied, run kubectl get pods as shown below, and you should see the pod running on the Krustlet node.

kubectl get pods --field-selector spec.nodeName=krustlet

# Output

NAME         READY   STATUS       RESTARTS   AGE
hello-wasm   0/1     ExitCode:0   0          71m

Don’t worry about the status. It’s normal for a workload that terminates normally to have ExitCode:0. Let’s check the logs for the pod by running kubectl logs.

kubectl logs hello-wasm

# Output

Hello, World!

You have successfully set up a Kubelet that can run Wasm workloads on your cluster.
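If you are wondering how the tolerations in the hello-wasm manifest get the pod onto the Krustlet node, here is a minimal Rust sketch of the matching rule Kubernetes applies between a pod's tolerations and a node's taints. It is a simplified model, not Kubernetes or Krustlet source code: the Taint and Toleration types and the can_schedule helper are made up for illustration, it ignores PreferNoSchedule and tolerationSeconds, and it assumes the Krustlet node carries kubernetes.io/arch=wasm32-wasi taints that mirror the tolerations above.

```rust
/// A taint on a node, for example kubernetes.io/arch=wasm32-wasi:NoSchedule.
struct Taint {
    key: String,
    value: String,
    effect: String,
}

/// The `operator` field of a toleration.
enum Operator {
    Equal,
    Exists,
}

/// One entry from the `tolerations` list of a pod spec.
struct Toleration {
    key: String,
    operator: Operator,
    value: String,
    effect: String,
}

impl Toleration {
    /// True when this toleration matches the given taint.
    fn tolerates(&self, taint: &Taint) -> bool {
        // An empty effect on the toleration matches every taint effect.
        let effect_ok = self.effect.is_empty() || self.effect == taint.effect;
        let key_ok = match self.operator {
            // `Exists` ignores the value; with an empty key it matches every key.
            Operator::Exists => self.key.is_empty() || self.key == taint.key,
            // `Equal` needs both key and value to match.
            Operator::Equal => self.key == taint.key && self.value == taint.value,
        };
        effect_ok && key_ok
    }
}

/// In this model a pod fits on a node only if every taint is tolerated.
fn can_schedule(tolerations: &[Toleration], taints: &[Taint]) -> bool {
    taints
        .iter()
        .all(|taint| tolerations.iter().any(|t| t.tolerates(taint)))
}

/// Taints assumed to be present on the Krustlet node (an assumption of this sketch).
fn krustlet_taints() -> Vec<Taint> {
    ["NoExecute", "NoSchedule"]
        .iter()
        .map(|effect| Taint {
            key: "kubernetes.io/arch".to_string(),
            value: "wasm32-wasi".to_string(),
            effect: effect.to_string(),
        })
        .collect()
}

/// The two tolerations from the hello-wasm manifest.
fn hello_wasm_tolerations() -> Vec<Toleration> {
    ["NoExecute", "NoSchedule"]
        .iter()
        .map(|effect| Toleration {
            key: "kubernetes.io/arch".to_string(),
            operator: Operator::Equal,
            value: "wasm32-wasi".to_string(),
            effect: effect.to_string(),
        })
        .collect()
}

fn main() {
    let taints = krustlet_taints();
    println!("hello-wasm fits: {}", can_schedule(&hello_wasm_tolerations(), &taints));
    println!("pod without tolerations fits: {}", can_schedule(&[], &taints));

    // A toleration with operator Exists and no key or effect tolerates every taint.
    let wildcard = [Toleration {
        key: String::new(),
        operator: Operator::Exists,
        value: String::new(),
        effect: String::new(),
    }];
    println!("wildcard toleration fits: {}", can_schedule(&wildcard, &taints));
}
```

Note the direction of the rule: a toleration lets a pod onto a tainted node, while the taint is what keeps pods without a matching toleration off it.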

Setting up Rust for WebAssembly

Now let us prepare an environment for WebAssembly with Rust. Make sure you are using a stable Rust version and not a nightly release.

First, you need to add the wasm32-wasi target for Rust so that you can compile Rust apps to WebAssembly. Run the below command:

rustup target add wasm32-wasi

Now you can create a new Rust application with Cargo.

cargo new --bin rust-wasm

Open the created rust-wasm folder in your favorite IDE. I use Visual Studio Code with the fantastic rust-analyzer and CodeLLDB plugins to develop in Rust.
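Once the project exists, one quick way to see which target a binary was compiled for is a compile-time check with the cfg! macro. The sketch below is optional and not part of the cat facts app; build_flavor is a hypothetical helper name, and the labels it returns are just strings chosen for this example.

```rust
/// Report which kind of target this binary was compiled for.
/// The check happens at compile time, so the answer is baked into the binary.
fn build_flavor() -> &'static str {
    if cfg!(all(target_arch = "wasm32", target_os = "wasi")) {
        "wasm32-wasi"
    } else if cfg!(target_arch = "wasm32") {
        "wasm32 (non-WASI)"
    } else {
        "native"
    }
}

fn main() {
    println!("This binary was built for: {}", build_flavor());
}
```

A plain cargo run builds for your host and takes the native branch, while a build with --target wasm32-wasi takes the first branch.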

Create the WebAssembly workload

Let’s write a small service that will print random cat facts on the console. For this, you will use a free public API that provides random cat facts.

Edit Cargo.toml and add the following dependencies:

[dependencies]

wasi-experimental-http = "0.7"

http = "0.2.5"

serde_json = "1.0.74"

env_logger = "0.9"

log = "0.4"

Then edit src/main.rs and add the following code:

use serde_json::Value;
use std::{str, thread, time};

fn main() {
    env_logger::init();
    let url = "https://catfact.ninja/fact".to_string();
    loop {
        let req = http::request::Builder::new()
            .method(http::Method::GET)
            .uri(&url)
            .header("Content-Type", "text/plain");
        let req = req.body(None).unwrap();

        log::debug!("Request: {:?}", req);

        // send request using the experimental bindings for http on wasi
        let mut res = wasi_experimental_http::request(req).expect("cannot make request");

        let response_body = res.body_read_all().unwrap();
        let response_text = str::from_utf8(&response_body).unwrap().to_string();
        let headers = res.headers_get_all().unwrap();
        log::debug!("{}", res.status_code);
        log::debug!("Response: {:?} {:?}", headers, response_text);

        // parse the response to json
        let cat_fact: Value = serde_json::from_str(&response_text).unwrap();
        log::info!("Cat Fact: {}", cat_fact["fact"].as_str().unwrap());

        // wait a minute before fetching the next fact
        thread::sleep(time::Duration::new(60, 0));
    }
}

The code is simple. It makes a GET request to the API and parses and prints the response every 60 seconds. Now you can build this into a Wasm binary using this Cargo command:

cargo build --release --target wasm32-wasi

That’s it. You have successfully created a WebAssembly binary using Rust.
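One thing to keep in mind about the code above: it calls unwrap and expect on every step, so a single failed request or malformed response will terminate the workload. If you want the service to survive transient failures, one option is to wrap the fallible step in a small retry helper. The following is a std-only sketch under that assumption; retry_with_backoff is a hypothetical helper, not part of wasi-experimental-http, and the closure in main is a stand-in for the real HTTP call.

```rust
use std::thread;
use std::time::Duration;

/// Run `op` until it succeeds or `max_attempts` is reached, doubling the
/// delay after every failure. `op` receives the 1-based attempt number and
/// always runs at least once.
fn retry_with_backoff<T, E, F>(max_attempts: u32, initial_delay: Duration, mut op: F) -> Result<T, E>
where
    F: FnMut(u32) -> Result<T, E>,
{
    let mut delay = initial_delay;
    let mut attempt = 1;
    loop {
        match op(attempt) {
            Ok(value) => return Ok(value),
            // Out of attempts: hand the last error back to the caller.
            Err(err) if attempt >= max_attempts => return Err(err),
            Err(_) => {
                thread::sleep(delay);
                delay = delay.saturating_mul(2);
                attempt += 1;
            }
        }
    }
}

fn main() {
    // Stand-in for the HTTP request: fails twice, then succeeds.
    let result: Result<&str, String> =
        retry_with_backoff(5, Duration::from_millis(10), |attempt| {
            if attempt < 3 {
                Err(format!("attempt {} failed", attempt))
            } else {
                Ok("a cat fact")
            }
        });

    match result {
        Ok(fact) => println!("Cat Fact: {}", fact),
        Err(err) => eprintln!("giving up: {}", err),
    }
}
```

In the real service, the closure would contain the request and parsing steps and return their errors instead of unwrapping them.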

Run the workload locally (optional)

Let’s run the workload locally using Wasmtime, a small JIT-style runtime for Wasm and WASI. Since Wasmtime doesn’t support networking out of the box, we need to use the wrapper provided by wasi-experimental-http. You can build it from source using the below command.

git clone https://github.com/deislabs/wasi-experimental-http.git
cd wasi-experimental-http
# Build for your platform
cargo build
# move to any location that is added to your PATH variable
mv ./target/debug/wasmtime-http ~/bin/wasmtime-http

Now run the below command from the rust-wasm project folder:

wasmtime-http target/wasm32-wasi/release/rust-wasm.wasm -a https://catfact.ninja/fact -e RUST_LOG=info
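The -e RUST_LOG=info argument in the command above hands an environment variable to the module, which is how env_logger learns the log level. The same mechanism can carry your own settings. Here is a minimal sketch, assuming a hypothetical FACT_INTERVAL_SECS variable that the cat facts app does not actually read; it falls back to the 60 seconds used in the tutorial code when the variable is missing or invalid.

```rust
use std::env;
use std::time::Duration;

/// Default polling interval, matching the 60 seconds used in the tutorial code.
const DEFAULT_INTERVAL_SECS: u64 = 60;

/// Turn the raw value of the (hypothetical) FACT_INTERVAL_SECS variable into
/// a Duration, falling back to the default for missing, invalid, or zero values.
fn interval_from(raw: Option<&str>) -> Duration {
    let secs = raw
        .and_then(|s| s.trim().parse::<u64>().ok())
        .filter(|&s| s > 0)
        .unwrap_or(DEFAULT_INTERVAL_SECS);
    Duration::from_secs(secs)
}

fn main() {
    // FACT_INTERVAL_SECS is an assumption of this sketch, not an existing setting.
    let raw = env::var("FACT_INTERVAL_SECS").ok();
    let interval = interval_from(raw.as_deref());
    println!("Polling every {} seconds", interval.as_secs());
}
```

Keeping the parsing in a function that takes an Option<&str> means you can exercise it without touching the real process environment.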

Run the workload in Kubernetes

Before you can run the workload in Kubernetes, you need to push the binary to a registry that supports OCI artifacts. OCI-compliant registries can be used for any OCI artifact, including Docker images, Wasm binaries, and so on. At the time of writing, Docker Hub did not support OCI artifacts, so you can use another registry like GitHub Package Registry, Azure Container Registry, or Google Artifact Registry. I’ll be using GitHub Package Registry as it’s the simplest to get started with, and most of you might already have a GitHub account.

First, you need to log in to GitHub Package Registry using docker login. Create a personal access token on GitHub with the write:packages scope and use that to log in to the registry.

export CR_PAT=<your-token>

echo $CR_PAT | docker login ghcr.io -u <Your GitHub username> --password-stdin

Now you need to push your Wasm binary as an OCI artifact; for this, you can use the wasm-to-oci CLI. Use the below command to install it on your machine. Download the appropriate version for your OS.

# Install for Linux

curl -LO https://github.com/engineerd/wasm-to-oci/releases/download/v0.1.2/linux-amd64-wasm-to-oci
# make the downloaded binary executable
chmod +x linux-amd64-wasm-to-oci
# move to any location that is added to your PATH variable
mv linux-amd64-wasm-to-oci ~/bin/wasm-to-oci

Now you can push the binary you built earlier to the GitHub Package Registry. Run the below command from the rust-wasm folder.

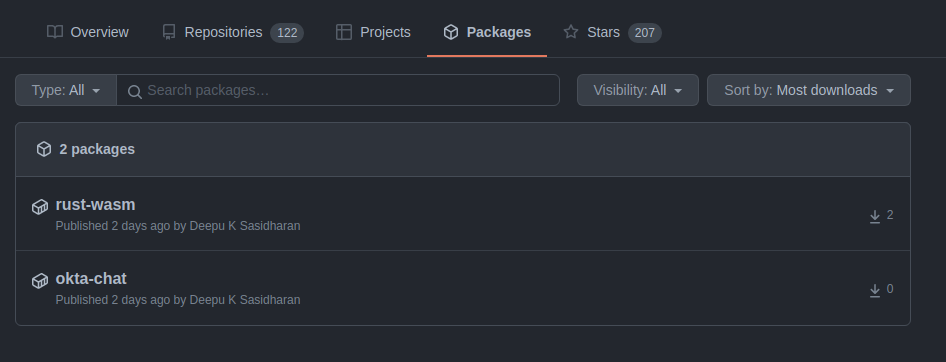

wasm-to-oci push target/wasm32-wasi/release/rust-wasm.wasm ghcr.io/<your GitHub user>/rust-wasm:latest

You should see a success message. Now check the GitHub Packages page on your profile, and you should see the artifact listed.

By default, the artifact is private, but you need to make it public so that Krustlet can pull it from the cluster. Click the package name and click the Package settings button, scroll down, click Change visibility, and change it to public.

You can check this by pulling the artifact using the below command:

wasm-to-oci pull ghcr.io/<your GitHub user>/rust-wasm:latest

Yay! You have successfully pushed your first Wasm artifact to an OCI registry. Now let’s deploy this to the Kubernetes cluster you created earlier.
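The reference you passed to wasm-to-oci has the same registry/repository:tag shape as a container image reference. As a rough illustration of how such a string breaks down, here is a simplified Rust sketch. OciReference and parse_reference are hypothetical names, octocat is a placeholder username, digests (@sha256:...) are not handled, and defaulting a missing tag to latest mirrors the Docker convention rather than anything confirmed about wasm-to-oci.

```rust
/// The three parts of a reference such as ghcr.io/octocat/rust-wasm:latest.
#[derive(Debug, PartialEq)]
struct OciReference {
    registry: String,
    repository: String,
    tag: String,
}

/// Split a reference into registry, repository, and tag.
/// Returns None when there is no registry part or any part is empty.
fn parse_reference(reference: &str) -> Option<OciReference> {
    // Everything before the first '/' is treated as the registry host.
    let (registry, rest) = reference.split_once('/')?;

    // A ':' after the last '/' separates the tag; otherwise assume "latest".
    let (repository, tag) = match rest.rsplit_once(':') {
        Some((repo, tag)) if !tag.contains('/') => (repo, tag),
        _ => (rest, "latest"),
    };

    if registry.is_empty() || repository.is_empty() || tag.is_empty() {
        return None;
    }

    Some(OciReference {
        registry: registry.to_string(),
        repository: repository.to_string(),
        tag: tag.to_string(),
    })
}

fn main() {
    // "octocat" stands in for your own GitHub username.
    match parse_reference("ghcr.io/octocat/rust-wasm:latest") {
        Some(r) => println!("registry={} repository={} tag={}", r.registry, r.repository, r.tag),
        None => println!("not a valid reference"),
    }
}
```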

Create a YAML file, let’s say k8s.yaml, with the following contents:

apiVersion: v1
kind: Pod
metadata:
  name: rust-wasi-example
  labels:
    app: rust-wasi-example
  annotations:
    alpha.wasi.krustlet.dev/allowed-domains: '["https://catfact.ninja/fact"]'
    alpha.wasi.krustlet.dev/max-concurrent-requests: "42"
spec:
  automountServiceAccountToken: false
  containers:
    - image: ghcr.io/<your GitHub user>/rust-wasm:latest
      imagePullPolicy: Always
      name: rust-wasi-example
      env:
        - name: RUST_LOG
          value: info
        - name: RUST_BACKTRACE
          value: "1"
  tolerations:
    - key: "node.kubernetes.io/network-unavailable"
      operator: "Exists"
      effect: "NoSchedule"
    - key: "kubernetes.io/arch"
      operator: "Equal"
      value: "wasm32-wasi"
      effect: "NoExecute"
    - key: "kubernetes.io/arch"
      operator: "Equal"
      value: "wasm32-wasi"
      effect: "NoSchedule"

Note: Remember to replace <your GitHub user> with your own GitHub username.

The annotations and tolerations are significant. The annotations allow the workload to make external network calls from Krustlet, and the tolerations let the pod be scheduled on the Wasm node, which is tainted to keep regular container workloads off it. We are also passing some environment variables that the application will use.
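To get a feel for what an allow-list like the allowed-domains annotation does, here is a small Rust sketch of one plausible check: compare the host of the requested URL against the hosts of the allowed entries. This is an illustrative model only, not Krustlet's implementation. host_of and is_allowed are hypothetical helpers, matching on host alone is an assumption, and the hand-rolled URL handling skips cases such as IPv6 literals.

```rust
/// Extract the host from an absolute URL,
/// e.g. "https://catfact.ninja/fact" -> Some("catfact.ninja").
fn host_of(url: &str) -> Option<&str> {
    // Drop the scheme ("https://").
    let (_, rest) = url.split_once("://")?;
    // The authority ends at the first '/', '?' or '#'.
    let authority = rest
        .split(|c: char| c == '/' || c == '?' || c == '#')
        .next()?;
    // Ignore any "user:pass@" prefix and ":port" suffix.
    let host_port = authority.rsplit('@').next()?;
    let host = host_port.split(':').next()?;
    if host.is_empty() {
        None
    } else {
        Some(host)
    }
}

/// True when the host of `url` matches the host of any allowed entry.
fn is_allowed(url: &str, allowed: &[&str]) -> bool {
    let host = match host_of(url) {
        Some(host) => host,
        // Anything that does not parse as an absolute URL is rejected.
        None => return false,
    };
    allowed
        .iter()
        .filter_map(|entry| host_of(entry))
        .any(|allowed_host| allowed_host.eq_ignore_ascii_case(host))
}

fn main() {
    // Same value as the allowed-domains annotation in the manifest.
    let allowed = ["https://catfact.ninja/fact"];

    for url in ["https://catfact.ninja/fact", "https://example.com/"] {
        println!("{} allowed: {}", url, is_allowed(url, &allowed));
    }
}
```

Under this model, a request to any other host is refused before it leaves the sandbox, which is the point of having to list the domain in the manifest.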

Now apply the manifest using the below command and check the pod status.

kubectl apply -f k8s.yaml

kubectl get pods -o wide

# Output

NAME                READY   STATUS    RESTARTS   AGE     IP       NODE       NOMINATED NODE   READINESS GATES
rust-wasi-example   1/1     Running   0          6m50s   <none>   krustlet   <none>           <none>

You should see the Wasm workload running successfully on the Krustlet node. Let’s check the logs.

kubectl logs rust-wasi-example

# Output

[2022-01-16T11:42:20Z INFO rust_wasm] Cat Fact: Polydactyl cats (a cat with 1-2 extra toes on their paws) have this as a result of a genetic mutation. These cats are also referred to as 'Hemingway cats' because writer Ernest Hemingway reportedly owned dozens of them at his home in Key West, Florida.

[2022-01-16T11:43:21Z INFO rust_wasm] Cat Fact: The way you treat a kitten in the early stages of its life will render its personality traits later in life.

Awesome. You have successfully created a Wasm workload using Rust and deployed it to a Kubernetes cluster without using containers. If you’d like to take a look at this solution in full, check out the GitHub repo.

So, are we ready to replace containers with WebAssembly?

WebAssembly on Kubernetes is not yet production-ready, as a lot of the supporting ecosystem is still experimental and WASI itself is still maturing. Networking is not yet stable, and the library ecosystem is only just coming along. Krustlet is also still pre-release software, and there is no straightforward way to run networking workloads, especially servers, on it. WasmEdge is a more mature alternative for networking workloads, but it’s much more involved to set up and run on Kubernetes than Krustlet. WasmCloud is another project to keep an eye on. So, for the time being, Krustlet is best suited to running jobs and use cases such as cluster monitoring. These are areas where you could use the extra performance anyway.

So, while Wasm on Kubernetes is exciting and containerless on Kubernetes is definitely on the horizon, containerized applications are still the way to go for production use. This is especially true for networking workloads like microservices and web applications. But, given how fast the ecosystem is evolving, especially in the Rust + Wasm + WASI space, I expect we will soon be able to use Wasm workloads on Kubernetes in production.

Learn more about Kubernetes and WebAssembly

If you want to learn more about Kubernetes and security in general, check out these additional resources.

- How to Secure Your Kubernetes Cluster with OpenID Connect and RBAC

- How to Secure Your Kubernetes Clusters With Best Practices

- How to Build Securely with Blazor WebAssembly (WASM)

- Securing a Cluster

- RBAC vs. ABAC: Definitions & When to Use

- Secure Access to AWS EKS Clusters for Admins

If you liked this tutorial, chances are you’ll enjoy the others we publish. Please follow @oktadev on Twitter and subscribe to our YouTube channel to get notified when we publish new developer tutorials.
