A Developer Guide to Reporting Vulnerabilities

Many of us are not familiar with vulnerability reporting and how it is different from reporting a regular bug. Frequently, I’ve seen people report vulnerabilities or potential security issues incorrectly. A public bug tracker or Stack Overflow is NOT the right tool; developers need to handle vulnerabilities differently and should not disclose them until the project/vendor fixes them.

In this post, you will learn the basics of vulnerabilities, how they relate to Common Vulnerabilities and Exposures (CVEs), and how to report them responsibly.

If you’d rather watch a video, I created a screencast of this tutorial.

What is a Vulnerability?

Simply put, a vulnerability is any weakness that an attacker can exploit to perform unauthorized actions. This means any security issue from SQL injection to side-channel attacks and everything in between.

A CVE Numbering Authority (CNA), such as MITRE, assigns every publicly identified vulnerability a Common Vulnerabilities and Exposures (CVE) identification number. A CVE ID uses the format CVE-YYYY-NNNN, where YYYY is the year the ID was assigned and NNNN is a unique number of four or more digits, for example, CVE-2019-0564 (not all vulnerabilities get a cool name like "Heartbleed"; people refer to most just by ID). A CVE also contains basic information about a vulnerability, like product, version, and description.
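To make the ID format concrete, here's a minimal Python sketch that splits a CVE ID into its year and sequence number. The names `parse_cve_id` and `CveId` are my own, not part of any CVE tooling, and the pattern assumes the sequence part is at least four digits long.

```python
import re
from typing import NamedTuple

# CVE-<four-digit year>-<sequence of four or more digits>
CVE_ID_PATTERN = re.compile(r"CVE-(\d{4})-(\d{4,})")


class CveId(NamedTuple):
    """Hypothetical container for the two parts of a CVE ID."""
    year: int
    sequence: int


def parse_cve_id(text: str) -> CveId:
    """Split a CVE ID into year and sequence number, or raise ValueError."""
    match = CVE_ID_PATTERN.fullmatch(text.strip().upper())
    if match is None:
        raise ValueError(f"not a valid CVE ID: {text!r}")
    return CveId(year=int(match.group(1)), sequence=int(match.group(2)))


print(parse_cve_id("CVE-2019-0564"))   # CveId(year=2019, sequence=564)
print(parse_cve_id("cve-2018-17793"))  # CveId(year=2018, sequence=17793)
```

A check like this only tells you the ID is well formed; it says nothing about whether the CVE exists or describes a real vulnerability.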

A CVE allows multiple data sources to describe the same vulnerability; each can add additional information, such as a Common Vulnerability Scoring System (CVSS) score or a mapping to a product name using Common Platform Enumeration (CPE) identifiers.
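As a rough illustration of what a CVSS number tells you, the sketch below maps a CVSS v3 base score to the qualitative severity label from the CVSS v3 rating scale (None, Low, Medium, High, Critical). The function name `cvss_v3_severity` and the sample scores are made up for this example.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3 base score (0.0-10.0) to its qualitative severity label."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS v3 scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 - 3.9
    if score < 7.0:
        return "Medium"    # 4.0 - 6.9
    if score < 9.0:
        return "High"      # 7.0 - 8.9
    return "Critical"      # 9.0 - 10.0


# Hypothetical scores, not taken from any real CVE entry
for sample in (0.0, 3.1, 5.3, 7.5, 9.8):
    print(sample, cvss_v3_severity(sample))
```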

Not all CVEs are actual vulnerabilities; some people misreport issues. For example, CVE-2018-17793 was a misunderstanding of what 'virtualenv' in Python was.

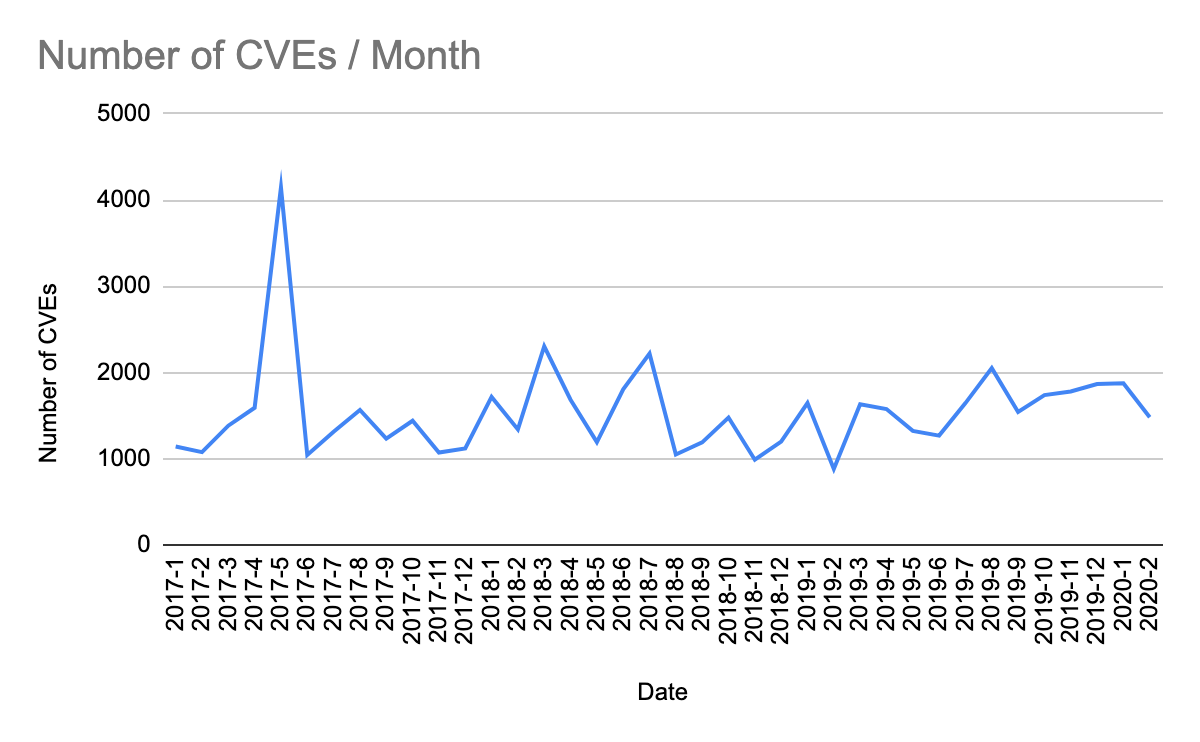

We all know software bugs are a fact of life; security-related issues are no different. Looking at the data from the past three years, you can see that, on average, 1,500 CVEs are reported each month, which is roughly 50 each day! Granted, not all of these are actually vulnerabilities, but since people don’t publicly report all vulnerabilities, I suspect the real number is much higher.

(Data from nvd.nist.gov)

Vulnerabilities are Bad?

Vulnerabilities are bad in the same way that software bugs are bad; nobody likes them, but they do exist. The difference is that we all know how to report software bugs; your first job probably taught you how to fill out a bug report. Vulnerabilities are only "bad" when people handle them poorly.

I’ll pick on the same virtualenv issue (CVE-2018-17793) as before. A user originally opened this issue on GitHub. The original title for the issue was "Exploit Title: virtualenv Sandbox escape". Clearly, the reporter thought this was a vulnerability; the problem is how they reported it. Instead of reporting a security issue publicly, it’s best to give the development team time to fix the issue before telling everyone about it.

Responsible Disclosure

Responsible disclosure is a model in which a user reports a vulnerability and gives the project (or vendor) time to fix it before they release any information publicly. This model stands in contrast to full disclosure, which is "tell everyone, everything, right now." The idea of full disclosure is to give developers and system admins all the info so they can make their own decisions. The problem with full disclosure is it also gives attackers the same information, and depending on the bug, the attackers might be able to exploit the issue before the project releases a fix.

Responsible disclosure allows for a period of secrecy after an issue has been reported, known as a "security embargo." A security embargo lasts until developers release a fix/patch. Ideally, a security embargo should be as short as possible while still allowing for a quality, tested release. The actual length of an embargo is a subject of debate and depends on whom you ask. Google’s Project Zero allows 90 days, whereas the Linux Kernel allows two weeks (actually 19 days to allow for long weekends and holidays). Occasionally, companies grant extensions; for example, Spectre’s embargo lasted eight months before details finally leaked out.
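If you want to see what those windows mean on a calendar, here's a small sketch that computes when an embargo would end. The `embargo_end` helper and the report date are hypothetical; the 90-day and 19-day values are just the two examples mentioned above, and real projects may negotiate different dates.

```python
from datetime import date, timedelta


def embargo_end(reported_on: date, embargo_days: int) -> date:
    """Return the date on which an embargo of the given length would end."""
    if embargo_days < 0:
        raise ValueError("embargo length cannot be negative")
    return reported_on + timedelta(days=embargo_days)


reported = date(2019, 1, 7)  # hypothetical report date

print(embargo_end(reported, 90))  # 2019-04-07
print(embargo_end(reported, 19))  # 2019-01-26
```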

Just like with any secret, the more people who know about it, the more likely it is to get out. The Linux Kernel is a popular project, and Linus Torvalds’s Linux kernel GitHub repo has more than 30 thousand stars! That is a lot of eyeballs watching the code; it would be hard to keep anything secret for very long.

"Three may keep a secret if two of them are dead." (Benjamin Franklin)

Report, Fix, Disclose

On to reporting the vulnerability: there is no standard way of doing this; most projects have a security page that describes their process, which could be an email alias like security@example.com or a simple web form. If you cannot find a way to report an issue, check bug bounty sites like Bugcrowd and HackerOne.

Just like with any other bug report, you must include enough information that the development team can reproduce the issue and understand the impact the issue has. You should receive a confirmation from the project along with a discussion of a timeline for a fix.

Check out RubyGarage’s guide on How to Write a Bug Report.

If you reported a vulnerability to an open-source project and you are interested in helping fix the issue, stay in contact with the project maintainers. You need to be careful what information you put in commit messages and pull requests, so you can ask them for guidance.

Once the developers release a fix and make it available to the public, you can disclose the vulnerability. This is the first time someone will alert the public to the issue. Most of the time, the vendor handles the disclosure, after which you can share what you learned with the world!

Tips for Making Vulnerabilities Easier to Report

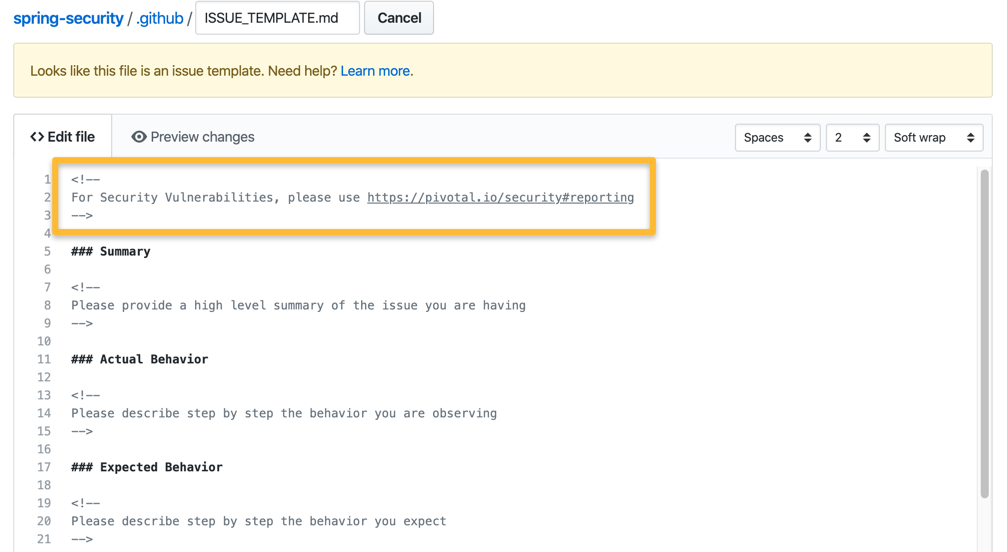

One way to make sure people don’t report vulnerabilities in your bug tracker is to warn users when they are creating issues. For GitHub projects, you can create a .github/ISSUE_TEMPLATE.md with a note about reporting security vulnerabilities elsewhere. Any time a reporter creates a new issue, they see your message. For example, Spring Security’s ISSUE_TEMPLATE looks like this:

GitHub projects should also add a security policy.

Another low-budget option is to create a /.well-known/security.txt file on your website with the appropriate contact information there. The site securitytxt.org even has a simple web form you can use to create one in a few seconds.
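If you'd rather script it than fill out a form, a security.txt file is just a handful of `Field: value` lines. Here's a minimal sketch that renders one; the `render_security_txt` function and the example values are my own invention. The `Expires` line is an addition on my part, based on RFC 9116, which lists it as a required field.

```python
from datetime import datetime, timezone


def render_security_txt(contact: str, encryption: str, expires: datetime) -> str:
    """Render a small security.txt body from a few field values.

    `expires` should be a timezone-aware datetime; it is written in UTC.
    """
    expires_utc = expires.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines = [
        "# Please report security vulnerabilities responsibly",
        f"Contact: {contact}",
        f"Encryption: {encryption}",
        f"Expires: {expires_utc}",  # assumption: added per RFC 9116
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values for illustration only
print(render_security_txt(
    contact="mailto:security@example.com",
    encryption="https://example.com/keys/my-pgp-key.txt",
    expires=datetime(2030, 1, 1, tzinfo=timezone.utc),
))
```

Serve the output as plain text from the `.well-known` directory at the root of your site.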

A finished security.txt file looks something like this:

# Please report security vulnerabilities responsibly
Contact: mailto:security@example.com
Encryption: https://example.com/keys/my-pgp-key.txt

Learn More About Security

This post showed you the importance of handling vulnerabilities differently from regular bugs.

If you have questions, please leave a comment below. If you liked this tutorial, follow @oktadev on Twitter, follow us on LinkedIn, or subscribe to our YouTube channel.

PS: We’ve just recently launched a new [security site](https://sec.okta.com/) where we’re publishing in-depth security articles and guides. If you’re interested in infosec, please check it out. =)
